GAMES203-01 Introduction & Scanning

Introduction

Generally, when we try to turn a real-world object into a digital 3D object and then into a 3D-printed object, we follow the geometry processing pipeline: reconstruct the real object, process and analyze the reconstruction, and print the edited model as a physical 3D object.

The topic of this course, 3D reconstruction and understanding, lies in the area of 3D vision and has two aims: recovering the underlying 3D structure from images (reconstruction) and analyzing 3D structures to understand 3D scenes (understanding). Additionally, the course covers different representations of 3D data.

Reconstruction

Tasks: From RGB/RGBD images to 3D structures.

Scanning

Scan registration

Surface reconstruction

Pose Estimation and Structure-from-motion

Multi-view stereo

Map synchronization

Recommended reading: An Invitation to 3-D Vision: From Images to Geometric Models

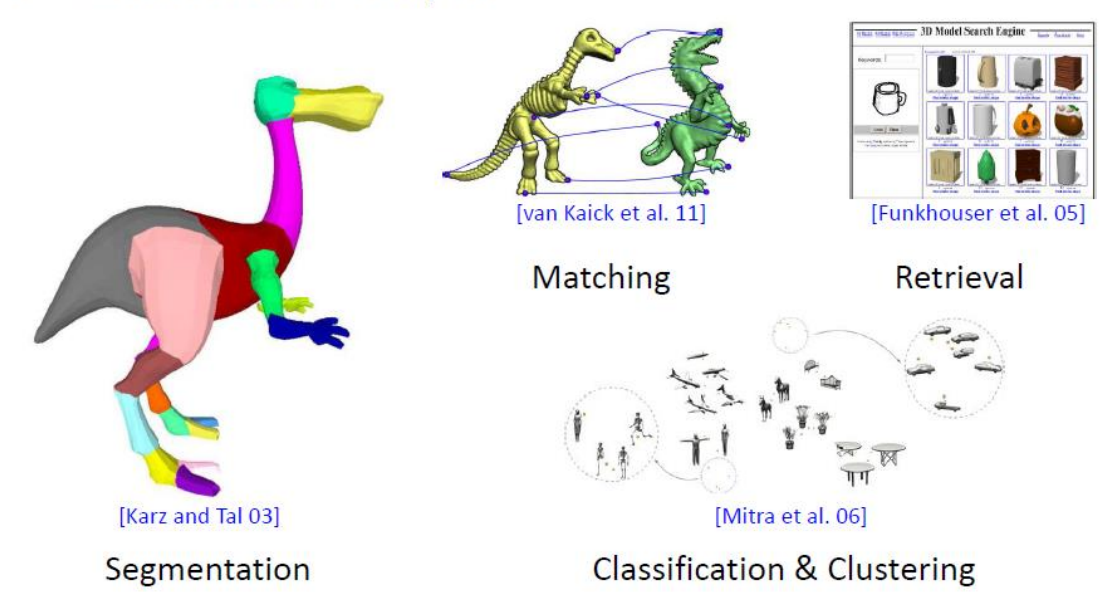

Understanding

Tasks: Classification, Segmentation, Detection (same as 2d vision)

Design algorithms to extract semantic information from 3D shapes.

Representation and Transformation of 3D data

Representation

Volumetric(Voxels)

Mesh

Point cloud

Implicit surface

Light Field Representation

Multi-view

Scene-graph

Semantic segments

Conversion between representations:

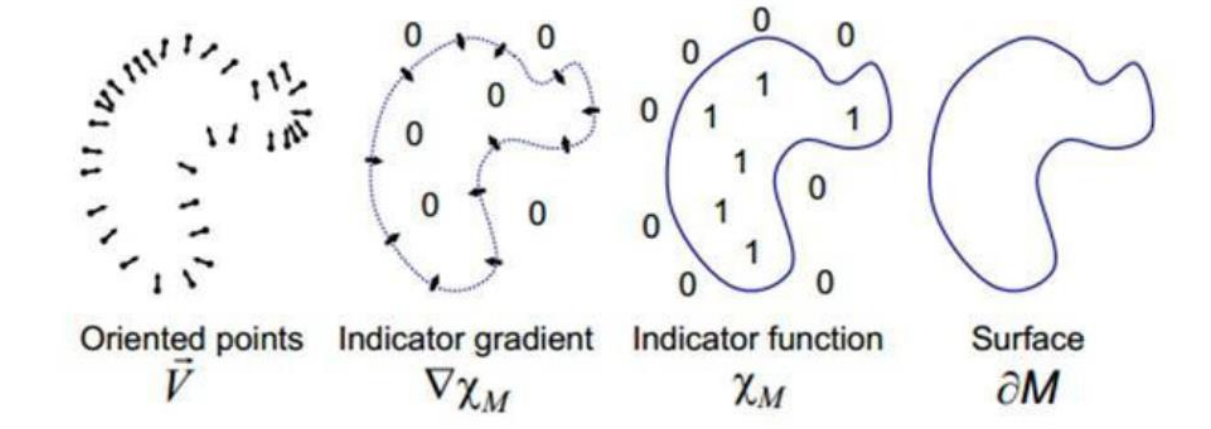

Implicit -> Mesh: Marching Cubes

Pointcloud -> Implicit -> Mesh [Kazhdan et al. 06]
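To make the Implicit -> Mesh conversion a little more concrete, here is a minimal sketch of the first step that Marching Cubes relies on: sampling the implicit function on a grid and finding the cells that the zero level set passes through. It does not triangulate anything. The sphere signed distance function, the grid bounds, and the names `sphere_sdf` and `surface_cells` are assumptions chosen for illustration, not part of the course material.

```python
import itertools
import math


def sphere_sdf(x, y, z, radius=1.0):
    """Signed distance to a sphere centered at the origin (negative inside)."""
    return math.sqrt(x * x + y * y + z * z) - radius


def surface_cells(sdf, lo=-1.5, hi=1.5, n=8):
    """Return indices (i, j, k) of grid cells whose corners straddle sdf = 0."""
    step = (hi - lo) / n
    # Sample the implicit function at the (n + 1)^3 grid corners.
    values = {
        (i, j, k): sdf(lo + i * step, lo + j * step, lo + k * step)
        for i, j, k in itertools.product(range(n + 1), repeat=3)
    }
    cells = []
    for i, j, k in itertools.product(range(n), repeat=3):
        corners = [
            values[(i + di, j + dj, k + dk)]
            for di, dj, dk in itertools.product((0, 1), repeat=3)
        ]
        # A cell with both negative and non-negative corners is crossed
        # by the surface; Marching Cubes would emit triangles here.
        if min(corners) < 0.0 <= max(corners):
            cells.append((i, j, k))
    return cells


if __name__ == "__main__":
    cells = surface_cells(sphere_sdf)
    print(f"{len(cells)} of {8 ** 3} cells contain the surface")
```

Only the mixed-sign cells need further work, which is why the method scales with the surface area rather than the full volume once the grid has been sampled.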

Recommended reading:

Point-Based Graphics

Polygon Mesh Processing

Related Math Areas

Geometry: Linear Algebra, Perspective geometry, Differential Geometry & Topology

Numerical Optimization: Linear/Nonlinear, Convex/Nonconvex, Continuous/Discrete, Deterministic/Stochastic …

Assignment & Projects

dense reconstruction, primitive extraction

project: omitted.

Scanning

Depth Sensing

Contact methods: direct mechanical contact (CMM, jointed arm), inertial (gyroscope and accelerometer), ultrasonic and magnetic trackers.

- Jointed arms (touch probes) with angular encoders return the position and orientation of the probe on contact.

Transmissive: like MRIs.

Reflective

Non-optical: Radar & Sonar

Optical

Passive: Shape from (stereo, motion, shading, texture, focus)

Active: Time of Flight & Triangulation

These notes only dig into the two active methods.

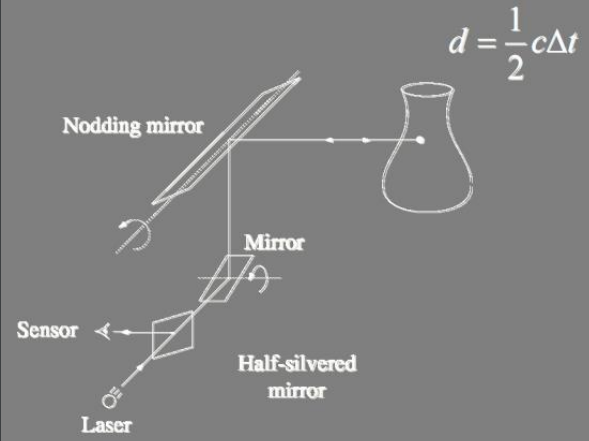

Time-Of-Flight

Send out a pulse of light (usually a laser) and time how long it takes to return.

Direct, but precision is worse at short distances (probably because a fixed timing error becomes a large fraction of a short round trip).
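The round-trip timing can be turned into a distance with one line of arithmetic, and the same arithmetic hints at why short ranges suffer. The sketch below assumes light travels in a straight line to the surface and back, and the 100 ps timing error used in the example is a made-up number for illustration, not a figure from the lecture.

```python
SPEED_OF_LIGHT = 299_792_458.0  # meters per second


def tof_distance(round_trip_seconds):
    """Distance to the surface: the pulse covers the distance twice."""
    return SPEED_OF_LIGHT * round_trip_seconds / 2.0


def relative_error(distance_m, timing_error_s):
    """Range error caused by a fixed timing error, relative to the distance."""
    range_error = tof_distance(timing_error_s)
    return range_error / distance_m


if __name__ == "__main__":
    timing_error = 100e-12  # assumed timing error of 100 picoseconds
    for distance in (0.1, 1.0, 10.0):
        print(distance, relative_error(distance, timing_error))
```

Under this assumption the absolute range error is the same at every distance, so it is the relative error that grows as the target gets closer.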

Triangulation

Given an object, how can we measure the position of a point on it using two rays of light?

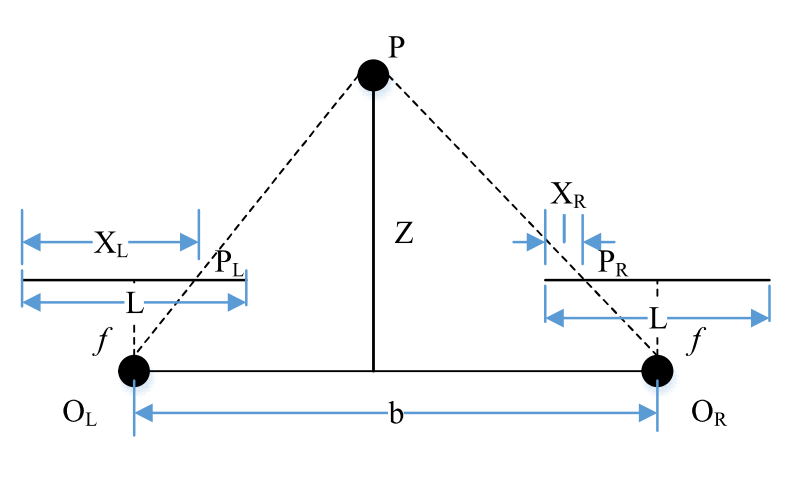

Basic: Parallax Method

Parallax d = |XL − XR|

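Shifting OL onto OR along the baseline OLOR and applying similar triangles shows that $Z = f \cdot \frac{b}{d}$, where $b$ is the baseline length |OLOR|, $f$ is the focal length, and $Z$ is the depth of the point.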
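As a quick numerical check of the parallax relation, the following sketch computes depth from the two image coordinates. It assumes XL, XR, and the focal length are all given in pixels and the baseline in meters, so the depth comes out in meters; the function name and the sample numbers are illustrative only.

```python
def depth_from_parallax(x_left, x_right, focal_length, baseline):
    """Depth Z = f * b / d, with parallax d = |XL - XR|."""
    d = abs(x_left - x_right)
    if d == 0:
        # Zero parallax means the point is at infinity (rays are parallel).
        raise ValueError("parallax is zero; depth is unbounded")
    return focal_length * baseline / d


if __name__ == "__main__":
    # Hypothetical setup: f = 500 px, b = 0.1 m, parallax of 10 px.
    print(depth_from_parallax(12.0, 2.0, focal_length=500.0, baseline=0.1))
```

Because depth is inversely proportional to the parallax, distant points produce small parallax values, and a one-pixel error there changes the depth estimate far more than it does for nearby points.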

Kinect uses two 3D depth sensors and one RGB camera, combining the structured light described below with depth from focus/stereo.

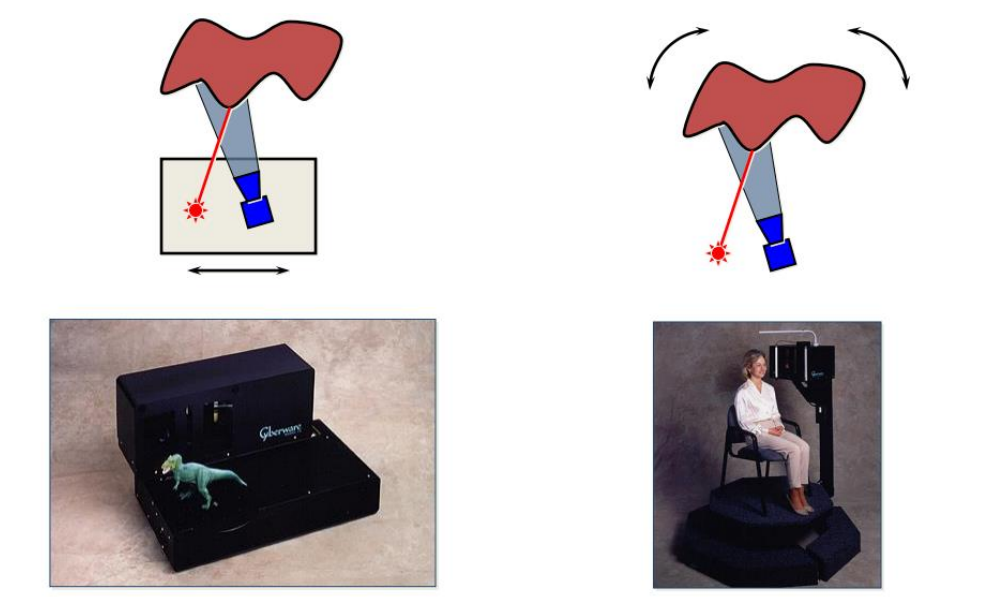

Move to measure more points

When measuring an object, the light source acts as OL and the camera acts as OR. We get XL from the direction of the projected light, which is known from the light pattern, and XR from the pixel that captures that pattern.

Move! To cover the whole object, we need to move the camera and the light source. To keep the measurement simple, most scanners mount the camera and light source rigidly and move them together as a unit.

3D Cases: Structured Light

To handle complex 3D surfaces, we can project a stripe or a grid rather than a single light dot. (Measuring points one by one is very slow compared with structured light.)

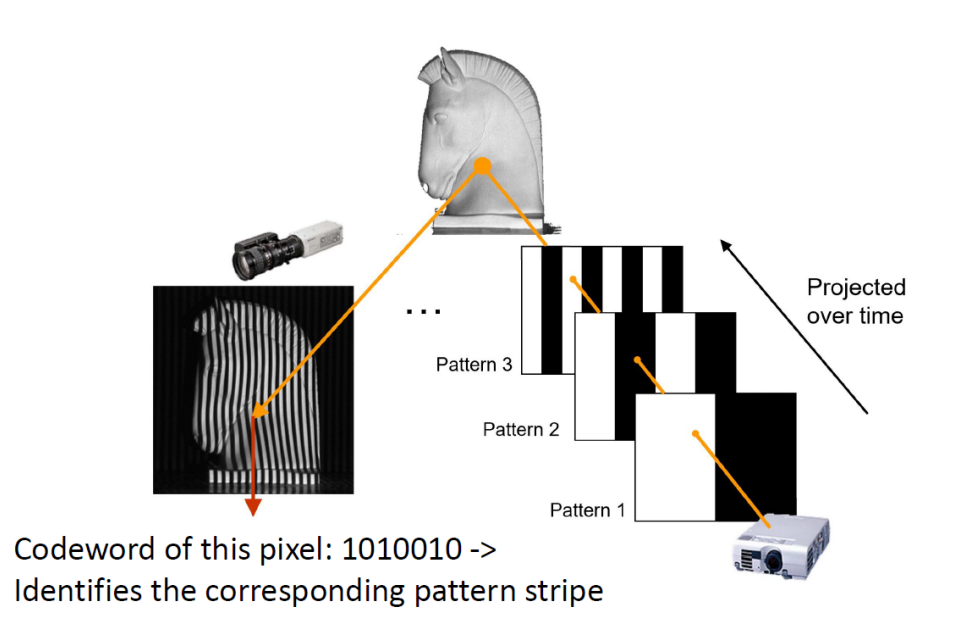

Then we have structured light. Its general principle is to project a known pattern onto the scene and infer depth from the deformation of that pattern.

The light pattern can be time-coded: each stripe is assigned a unique illumination code over time so that it can be identified. A binary code is one example.

(Note that all points on a stripe can be measured at once: each stripe corresponds to a plane of light cast by the light source, and each pixel ray intersects that plane at exactly one point.)
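The geometric fact in the note above, that a pixel ray meets a light plane at a single point, can be sketched as a ray-plane intersection. This is a minimal illustration that assumes the camera center, the ray direction, and the light plane are already known in one common coordinate frame (that is, the scanner is calibrated); all names are hypothetical.

```python
def dot(a, b):
    """Dot product of two 3D vectors given as tuples."""
    return sum(x * y for x, y in zip(a, b))


def intersect_ray_plane(origin, direction, plane_point, plane_normal, eps=1e-12):
    """Return the point where the ray hits the plane, or None if there is none."""
    denom = dot(direction, plane_normal)
    if abs(denom) < eps:
        # The ray is parallel to the light plane: no unique intersection.
        return None
    offset = tuple(p - o for p, o in zip(plane_point, origin))
    t = dot(offset, plane_normal) / denom
    if t < 0:
        # The plane lies behind the camera along this ray.
        return None
    return tuple(o + t * d for o, d in zip(origin, direction))


if __name__ == "__main__":
    camera_center = (0.0, 0.0, 0.0)
    pixel_ray = (0.1, 0.0, 1.0)  # direction through one pixel
    # Hypothetical light plane x = 0.2, described by a point and a normal.
    print(intersect_ray_plane(camera_center, pixel_ray, (0.2, 0.0, 0.0), (1.0, 0.0, 0.0)))
```

Every pixel that sees the stripe gets its own ray but shares the same plane, which is why a whole stripe yields a full line of 3D points from one image.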

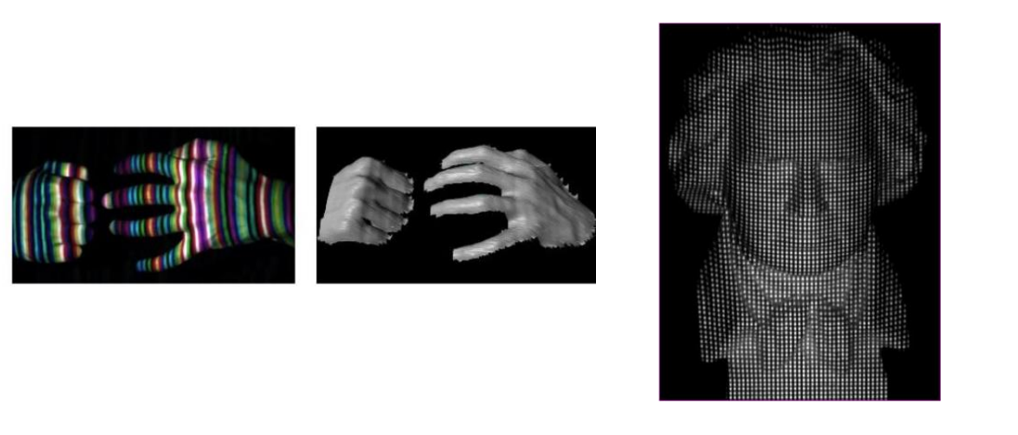
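A plain binary time code can be sketched in a few lines: with N projected frames, each of 2^N stripes is lit or dark in every frame, and the on/off sequence a pixel observes spells out the stripe index. The sketch assumes ideal, noise-free thresholding of each pixel into 0 or 1, and the function names are illustrative rather than taken from the course.

```python
def stripe_patterns(num_bits):
    """Return num_bits frames; frames[b][s] is 1 if stripe s is lit in frame b."""
    num_stripes = 2 ** num_bits
    return [
        # Frame 0 carries the most significant bit of the stripe index.
        [(s >> (num_bits - 1 - b)) & 1 for s in range(num_stripes)]
        for b in range(num_bits)
    ]


def decode_stripe(observed_bits):
    """Recover the stripe index from the bits a pixel saw, first frame first."""
    index = 0
    for bit in observed_bits:
        index = (index << 1) | bit
    return index


if __name__ == "__main__":
    frames = stripe_patterns(3)  # 3 frames distinguish 8 stripes
    for frame in frames:
        print(frame)
    seen_by_pixel = [frame[5] for frame in frames]  # a pixel lying on stripe 5
    print(decode_stripe(seen_by_pixel))
```

The number of frames grows only logarithmically with the number of stripes, but every frame must observe the same scene, which is the source of the multi-shot limitation.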

Alternatively, the pattern can be space-coded: project a grid, stripes with specific patterns, or a pattern of dots. These methods rely not only on the measurement at a point but also on its neighborhood, so the pattern needs to be carefully designed to avoid collisions (two locations that look the same locally).
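One simple way to think about collisions in a space-coded pattern is to require that every window of neighboring stripes be unique. The check below is a sketch for a one-dimensional stripe sequence only; treating the pattern as a string of color labels and using a fixed window size are assumptions made for illustration, not the design used by any particular scanner.

```python
def has_unique_windows(pattern, window):
    """True if every run of `window` consecutive symbols occurs only once."""
    seen = set()
    for start in range(len(pattern) - window + 1):
        key = tuple(pattern[start:start + window])
        if key in seen:
            # Two locations share the same local code: a collision.
            return False
        seen.add(key)
    return True


if __name__ == "__main__":
    # Hypothetical color-coded stripes: R, G, B are stripe colors.
    print(has_unique_windows("RGBRBG", 2))
    print(has_unique_windows("RGRG", 2))
```

If the check passes, a pixel that sees a stripe together with its neighbors can identify the stripe from a single image, provided the neighborhood is not broken up by a depth discontinuity or occlusion.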

Comparison of time-coded and space-coded structured light

Time

Each point can be identified from its own appearance alone, so time-coded structured light has high precision.

Relies on multiple shots, so it is not suitable for dynamic scenes.

Space

Needs only one shot, so it is suitable for dynamic scenes.

Relies on each point's neighborhood, so pattern design matters and the scene needs to be continuous (and unoccluded), with only one object.

Further reference for structured light: https://sharzy.in/assets/doc/structured-light.pdf

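Note

2023.11.16&17

The comments under this course seem to suggest that its lecture quality is questionable, so the papers and books it mentions need to be studied on your own…

I'll grind through one lecture every day or two and see whether I can keep up. If not, studying SLAM十四讲 (14 Lectures on Visual SLAM) or reading other introductory papers first is also an option.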